This year we’re documenting a series of new and as-yet undocumented VM detection tricks. These detection tricks will be focused on 64-bit Windows 10 or Windows Server 2019 guests, targeting a variety of VM platforms.

In the first article we investigated the use of physical memory maps as a way of distinguishing between real and virtualized hardware. While hardware discrepancies are a rich source of VM detection tricks, it is also worth looking at the guest-side software used by virtualization solutions.

This month’s trick was discovered accidentally, while researching something unrelated, but it is actually useful for much more than just VM detection. You can use this trick to stealthily discover the presence of almost any driver on the system you’re executing code on, using only a common multi-purpose API, without ever interacting with the driver service or driver files. Not only that, but it also leaks the addresses of driver code in kernel memory space, which is useful in driver exploitation scenarios. Proof of concept source code can be found here.

The trick, put simply, involves detecting kernel mode drivers by the threads that they create. Since drivers may create a certain number of threads, with varying predictable properties, these attributes can be used to fingerprint them and build heuristics that are useful for detection.

While looking through various system information in Process Explorer, I noticed that thread information for the System process (PID 4) was shown even when it was run without administrative privileges. This information includes the starting address of the thread, which Process Explorer helpfully translates to a name and offset using symbols.

The system process contains kernel and driver threads. On a Hyper-V guest I was able to see a large number of threads from the vmbus.sys driver.

Out of curiosity, I wondered exactly how many threads were started at each address within that driver. The numbers came out as follows:

- vmbus.sys+0x4230: 1

- vmbus.sys+0x4410: 1

- vmbus.sys+0x5130: 336

The large number of threads at that last address is interesting. This VM happens to have 112 virtual CPU cores assigned to it.

If you multiply 112 by 3 you get 336, which is too neat to be a coincidence. The vmbus.sys driver seems to spawn three threads per CPU core, all at the same starting address, plus two other threads with addresses a little lower down. To confirm this, I powered the VM down and changed the number of CPUs it was given, then tried again. The thread count at this offset was always three times the number of logical CPUs.

To make sure I fully understood the driver behavior, I threw vmbus.sys into Ghidra. The imports section of the driver showed that both PsCreateSystemThread and PsCreateSystemThreadEx were imported, either of which could be used to start threads. The first API is documented, but the Ex variant is not. It is, however, mentioned in the Xbox OpenXDK code, and the signature should be compatible. By looking through the call sites I could see that this thread creation behavior was coming from AwInitializeSingleQueue. After some quick reverse engineering I was presented with this:

This function creates at most three threads per queue, as per the condition on line 43. The thread start points to AwWorkerThread. This queue creation function is exclusively called by AwInitializeQueues, which I also reverse engineered:

This function queries the active processor count, then creates a queue for each processor. This is why we see three driver threads per logical processor.

Detecting these driver threads programmatically involves some research. We need to figure out how the system thread information is being read by Process Explorer, use that same approach to extract the starting address information, then utilize that in a signature-based approach to detecting the VM driver.

The NtQuerySystemInformation API is commonly used to retrieve information about the system’s configuration, capabilities, its processes and threads, handles, and all sorts of other details. It is partially documented and generally not recommended for use in applications, but it is still not unusual to see this API being used in legitimate programs.

The API is multi-functional in terms of the types of information you can retrieve with it. Each type is called an information class. Generally speaking, NtQuerySystemInformation is used by passing an information class that you would like to retrieve information about, along with a buffer that receives that information. The data structure returned in the buffer is specific to the information class that was requested. There are hundreds of information classes and many of them are undocumented. Even for documented information classes, many of the data structures are partially opaque and undocumented. Luckily for people like me, reverse engineers have put a lot of effort into documenting these information classes and their data structures. One of the best resources on this matter is Geoff Chappell’s undocumented Windows internals website.
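The calling pattern described above (information class in, caller-supplied buffer out) usually ends up as a retry loop, because the required buffer size is not known in advance. The following is a minimal sketch of that loop, not the proof of concept's code. To keep it runnable on any platform it calls through a function pointer with the same parameter shape as NtQuerySystemInformation. `query_fn`, `query_with_retry`, and `fake_query` are hypothetical names introduced for illustration, and `fake_query` is a stand-in that simply pretends the data is 0x5000 bytes long. The status value 0xC0000004 is STATUS_INFO_LENGTH_MISMATCH.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

#define STATUS_SUCCESS              0x00000000u
#define STATUS_INFO_LENGTH_MISMATCH 0xC0000004u

/* Same parameter shape as NtQuerySystemInformation:
 * information class, buffer, buffer size, required size. */
typedef uint32_t (*query_fn)(uint32_t info_class, void *buffer,
                             uint32_t length, uint32_t *return_length);

/* Calls `query` with a growing buffer until the data fits. Returns a
 * malloc'd buffer (caller frees) or NULL on failure. *out_len receives
 * the number of bytes the query reported. */
void *query_with_retry(query_fn query, uint32_t info_class, uint32_t *out_len)
{
    assert(query != NULL && out_len != NULL);
    uint32_t length = 0x1000;
    for (int attempt = 0; attempt < 16; attempt++) {
        void *buffer = malloc(length);
        if (buffer == NULL)
            return NULL;
        uint32_t needed = 0;
        uint32_t status = query(info_class, buffer, length, &needed);
        if (status == STATUS_SUCCESS) {
            *out_len = needed;
            return buffer;
        }
        free(buffer);
        if (status != STATUS_INFO_LENGTH_MISMATCH)
            return NULL;
        /* Processes and threads come and go between calls, so add headroom. */
        length = (needed > length ? needed : length) + 0x1000;
    }
    return NULL;
}

/* Hypothetical stand-in for the real API so the sketch runs anywhere. */
uint32_t fake_query(uint32_t info_class, void *buffer,
                    uint32_t length, uint32_t *return_length)
{
    (void)info_class;
    *return_length = 0x5000;
    if (length < 0x5000)
        return STATUS_INFO_LENGTH_MISMATCH;
    memset(buffer, 0, 0x5000);
    return STATUS_SUCCESS;
}
```

On Windows you would pass a wrapper around the real API instead of `fake_query`, with the information class set to SystemProcessInformation.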

One of the most common use-cases for NtQuerySystemInformation is to get a list of the processes running on the system, using the SystemProcessInformation information class. A developer might wish to retrieve such a list for any number of benign reasons, so it is rarely ever considered to be a red flag in terms of application behavior. The official documentation on MSDN describes this particular information class as follows:

Returns an array of SYSTEM_PROCESS_INFORMATION structures, one for each process running in the system. These structures contain information about the resource usage of each process, including the number of threads and handles used by the process, the peak page-file usage, and the number of memory pages that the process has allocated.

The documentation does not link to the structure definition, and the structure is largely undocumented. A partial structure definition can, however, be found in the Microsoft Platform SDK header files:

    typedef struct _SYSTEM_PROCESS_INFORMATION {
        ULONG NextEntryOffset;
        BYTE Reserved1[52];
        PVOID Reserved2[3];
        HANDLE UniqueProcessId;
        PVOID Reserved3;
        ULONG HandleCount;
        BYTE Reserved4[4];
        PVOID Reserved5[11];
        SIZE_T PeakPagefileUsage;
        SIZE_T PrivatePageCount;
        LARGE_INTEGER Reserved6[6];
    } SYSTEM_PROCESS_INFORMATION, *PSYSTEM_PROCESS_INFORMATION;

There are a large number of redacted (or “opaque”) fields here, which isn’t much fun if we’re looking for juicy information that might lead to a VM detection trick. One thing that does stand out, though, is that there’s a NextEntryOffset field. Such fields are usually only used in association with arrays of variable-length structures, but nothing in the structure definition implies that this structure is variable in length. This leads to three possible conclusions: that there are variable-length fields later in the structure that are not shown here, that there are other structures in the buffer between each SYSTEM_PROCESS_INFORMATION structure, or both.

Geoff Chappell’s reverse engineering notes have the following to say:

The information buffer is to receive a collection of irregularly spaced SYSTEM_PROCESS_INFORMATION structures, one per process. The spacing is irregular because each such structure can be followed by varying numbers of other fixed-size structures and by variable-size data too: an array of SYSTEM_THREAD_INFORMATION structures, one for each of the process’s threads; a SYSTEM_PROCESS_INFORMATION_EXTENSION structure; and the process’s name.

This is useful to know – not only do we get process information from this API, but we also get thread information! This explains why the length is variable, too: there are other structures between each process information structure.

The overall layout of the returned data for the SystemProcessInformation class looks like this:

Geoff has meticulously documented the SYSTEM_PROCESS_INFORMATION and SYSTEM_THREAD_INFORMATION structures for both 32-bit and 64-bit versions of Windows going all the way back to Windows NT 3.1. This saved me a ton of reverse engineering work. Thanks, Geoff!

The SYSTEM_THREAD_INFORMATION structure looks like this:

    typedef struct _SYSTEM_THREAD_INFORMATION {
        LARGE_INTEGER KernelTime;
        LARGE_INTEGER UserTime;
        LARGE_INTEGER CreateTime;
        ULONG WaitTime;
        PVOID StartAddress;
        CLIENT_ID ClientId;
        LONG Priority;
        LONG BasePriority;
        ULONG ContextSwitches;
        ULONG ThreadState;
        ULONG WaitReason;
    } SYSTEM_THREAD_INFORMATION, *PSYSTEM_THREAD_INFORMATION;

There are two particularly useful fields here. The first is StartAddress, which contains the memory address at which the thread was started. For kernel and driver threads, this is the address in kernel memory space. The second useful field is ClientId, which contains the ID of the thread and its parent process.
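Walking the returned buffer follows directly from the layout described above: read a process entry, read the thread entries that follow it, then jump ahead by NextEntryOffset until it is zero. The sketch below shows that walk over deliberately simplified stand-in structures so that it compiles on any platform. `proc_entry` and `thread_entry` are hypothetical and do not match the real field layout. With the real structures the thread array begins after the full fixed-size SYSTEM_PROCESS_INFORMATION, whose size depends on the Windows version and architecture.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Simplified stand-ins; the real structures carry many more fields. */
typedef struct {
    uint32_t next_entry_offset;  /* bytes to the next process entry, 0 = last */
    uint32_t number_of_threads;  /* thread entries that follow this header */
    uint64_t unique_process_id;
} proc_entry;

typedef struct {
    uint64_t start_address;
    uint64_t unique_thread;
} thread_entry;

/* Copies the start address of every thread owned by `pid` into `out`.
 * Returns the number of matching threads; at most `cap` are stored. */
size_t collect_start_addresses(const uint8_t *buf, size_t len, uint64_t pid,
                               uint64_t *out, size_t cap)
{
    assert(buf != NULL && out != NULL);
    size_t found = 0, pos = 0;
    while (pos + sizeof(proc_entry) <= len) {
        proc_entry p;
        memcpy(&p, buf + pos, sizeof p);        /* avoids unaligned reads */
        size_t threads_at = pos + sizeof p;
        for (uint32_t i = 0; i < p.number_of_threads; i++) {
            size_t at = threads_at + (size_t)i * sizeof(thread_entry);
            if (at + sizeof(thread_entry) > len)
                return found;                   /* truncated buffer */
            thread_entry t;
            memcpy(&t, buf + at, sizeof t);
            if (p.unique_process_id == pid) {
                if (found < cap)
                    out[found] = t.start_address;
                found++;
            }
        }
        if (p.next_entry_offset == 0)
            break;                              /* last process entry */
        pos += p.next_entry_offset;
    }
    return found;
}
```

For this trick the interesting call is the one with `pid` set to 4, the System process, since that is where kernel and driver threads live.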

By iterating through these structures we can group them by the thread start address and look for any group that happens to contain the right number of threads. On my VM I found two candidate thread groups:

    [?] Potential target with 336 threads at address FFFFF8068EC3D010
    [?] Potential target with 336 threads at address FFFFF8064F2685D0

This means we got a false positive.
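The grouping step itself is straightforward. One way to do it, sketched here under the assumption that the start addresses have already been collected into a flat array, is to sort the addresses and count runs of equal values. `thread_group` and `group_by_start_address` are names made up for this example.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

typedef struct {
    uint64_t start_address;
    size_t   count;          /* threads that share this start address */
} thread_group;

static int compare_u64(const void *a, const void *b)
{
    uint64_t x = *(const uint64_t *)a, y = *(const uint64_t *)b;
    return (x > y) - (x < y);
}

/* Sorts `addrs` in place, then writes one group per distinct address to
 * `groups` in ascending address order. Returns the number of groups
 * stored; distinct addresses beyond `cap` are dropped. */
size_t group_by_start_address(uint64_t *addrs, size_t n,
                              thread_group *groups, size_t cap)
{
    assert(addrs != NULL && groups != NULL);
    qsort(addrs, n, sizeof addrs[0], compare_u64);
    size_t used = 0;
    for (size_t i = 0; i < n; i++) {
        if (used > 0 && groups[used - 1].start_address == addrs[i]) {
            groups[used - 1].count++;           /* same run as before */
        } else if (used < cap) {
            groups[used].start_address = addrs[i];
            groups[used].count = 1;
            used++;
        }
    }
    return used;
}
```

Any group whose `count` equals three times the logical CPU count is then a candidate.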

For debugging purposes, I added some code to find the system module information (call NtQuerySystemInformation with SystemModuleInformation to query this) which would tell me the names, load addresses, and sizes of all modules loaded into memory on the system. This itself is a well-known VM detection technique, but it isn’t particularly stealthy because you have to look for the name of the module you’re trying to detect. This makes it obvious that you’re trying to detect VM drivers, and can be easily evaded by just renaming the driver.
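Once the module list is available, turning a thread start address into a module name and offset is a simple range check. The sketch below assumes the module information has already been copied into a small array. `module_info` and `find_module` are hypothetical names, not the structures returned by the real API.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, trimmed-down view of one loaded module. */
typedef struct {
    const char *name;
    uint64_t    base;   /* load address */
    uint32_t    size;   /* image size in bytes */
} module_info;

/* Returns the module whose image contains `address`, or NULL if none
 * does. On success *offset receives address - base. */
const module_info *find_module(const module_info *mods, size_t n,
                               uint64_t address, uint64_t *offset)
{
    assert(mods != NULL && offset != NULL);
    for (size_t i = 0; i < n; i++) {
        if (address >= mods[i].base &&
            address - mods[i].base < mods[i].size) {
            *offset = address - mods[i].base;
            return &mods[i];
        }
    }
    return NULL;
}
```

This is debugging aid only; the detection heuristic does not need it.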

The debug output tells us the identity of the false positive, but also confirms that we correctly detected the vmbus.sys driver too:

    [?] Potential target with 336 threads at address FFFFF8068EC3D010
    [^] Module: \SystemRoot\System32\drivers\AgileVpn.sys+0x1d010
    [?] Potential target with 336 threads at address FFFFF8064F2685D0
    [^] Module: \SystemRoot\System32\drivers\vmbus.sys+0x85d0

Why the Windows inbuilt VPN miniport driver needs so many threads is a question best left to performance analysts, but this tells us that our simple count-based detection heuristic is a little bit too crude.

Another reason we need a little more nuance in the heuristic is that most systems won’t have 112 logical processors – they’re more likely to have between 2 and 16. This brings us down into a much noisier realm, full of potential false positives. For example, here is the output from the same program when the VM has just four cores assigned:

    [?] Potential target with 12 threads at address FFFFF8071CFEA800
    [^] Module: \SystemRoot\system32\ntoskrnl.exe+0x5ea800
    [?] Potential target with 12 threads at address FFFFF8050ED2D010
    [^] Module: \SystemRoot\System32\drivers\AgileVpn.sys+0x1d010
    [?] Potential target with 12 threads at address FFFFF8071FF985D0
    [^] Module: \SystemRoot\System32\drivers\vmbus.sys+0x85d0

Clearly this simple counting heuristic isn’t good enough.

One approach to improving the heuristic would be to query system module information and calculate the thread offsets, so that we only detect threads when they’re at the exact right offset from the base of the driver module. This would work, but it’s fragile – it breaks if the driver is updated and the offset changes – and it makes it easier to see what we’re up to if someone starts debugging. You could avoid looking up the module information by detecting only the distances in memory between thread start addresses, and this may be useful for other drivers that don’t have distinct thread counts that give them away, but this will probably still break if the target driver is updated.

Thinking back to the threads that were created by the vmbus.sys driver, there were two other threads with start addresses a little lower in memory.

These extra threads always have start addresses somewhere in the 0x2000 bytes before the start address of the large group. We can use this to build a better detection heuristic:

- Dump all process and thread information.

- Walk through it and collect thread information into groups based on start address.

- Look for any group that contains three times as many threads as the number of logical CPUs.

- Look for two threads whose starting addresses are different to each other, and somewhere within 0x2000 bytes before the address from our candidate thread group.
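The last two steps can be sketched as a single pass over the thread groups. This is one reading of the heuristic, not the proof of concept's code. It assumes each group has a distinct start address, and it accepts a candidate when at least two other start addresses fall within the 0x2000 bytes below it. `thread_group`, `NEARBY_WINDOW`, and `find_candidate` are names introduced for this example.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct {
    uint64_t start_address;
    size_t   count;          /* threads that share this start address */
} thread_group;

#define NEARBY_WINDOW 0x2000u

/* Returns the index of the first group that has 3 * logical_cpus threads
 * and at least two other start addresses within NEARBY_WINDOW bytes
 * below it, or -1 when no group matches. */
int find_candidate(const thread_group *groups, size_t n, size_t logical_cpus)
{
    assert(groups != NULL && logical_cpus > 0);
    for (size_t i = 0; i < n; i++) {
        if (groups[i].count != 3 * logical_cpus)
            continue;
        uint64_t top = groups[i].start_address;
        size_t nearby = 0;
        for (size_t j = 0; j < n; j++) {
            uint64_t a = groups[j].start_address;
            /* Strictly below the candidate, and no more than 0x2000 away. */
            if (a < top && top - a <= NEARBY_WINDOW)
                nearby++;
        }
        if (nearby >= 2)
            return (int)i;
    }
    return -1;
}
```

Because only counts and relative distances are compared, nothing in the code names the driver being detected.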

This heuristic avoids most of the fragility issues and gets the results we were looking for:

    [*] Looking for candidate threads...
    [?] Potential target with 12 threads at address FFFFF8071CFEA800
    [^] Module: \SystemRoot\system32\ntoskrnl.exe+0x5ea800
    [*] Checking nearby threads...
    [?] Potential target with 12 threads at address FFFFF8050ED2D010
    [^] Module: \SystemRoot\System32\drivers\AgileVpn.sys+0x1d010
    [*] Checking nearby threads...
    [?] Potential target with 12 threads at address FFFFF8071FF95090
    [^] Module: \SystemRoot\System32\drivers\vmbus.sys+0x5090
    [*] Checking nearby threads...
    [!] Hyper-V VMBUS driver detected!

This is particularly stealthy because you don’t need to include any names, offsets, or other constants that are clearly specific to a particular driver, nor do you need to access driver files, registry keys, or query the service controller or WMI. If you remove the debugging code that looks up the module address, the only API that is needed is NtQuerySystemInformation, and you only use it with parameters that are commonly used across a wide range of applications for legitimate purposes.

To make the heuristic more resistant to future changes, I modified it to accept any thread group whose count is an exact multiple of the logical CPU count, with a multiplier of two or more. So, on our 112 logical CPU system, it would consider any thread group with 224, 336, 448, 560, etc. threads.
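Going by the example counts given (224, 336, 448, and 560 on a 112 CPU system), the relaxed check amounts to "an exact multiple of the CPU count, with a multiplier of at least two". A minimal sketch of that test, with `is_per_cpu_multiple` as a made-up name:

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* True when `count` is an exact multiple of `logical_cpus` and the
 * multiplier is two or more. */
bool is_per_cpu_multiple(size_t count, size_t logical_cpus)
{
    assert(logical_cpus > 0);
    return count % logical_cpus == 0 && count / logical_cpus >= 2;
}
```

A group with exactly one thread per CPU is rejected, since that pattern is far too common among kernel worker threads to be a useful signal.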

One step in this trick that I glossed over a little is detecting the number of logical CPUs on the system. While you might think that this is simple, there is a surprising amount of detail involved. Most APIs for querying the number of CPUs on the system are subject to processor group restrictions. Each process on the system has an affinity mask, which is a bitmask that specifies which logical processors its threads should be scheduled on. This affinity mask is the size of a pointer, so 32 bits for a 32-bit process and 64 bits for a 64-bit process. This is obviously problematic for systems with more than 32 or 64 processors, since a process cannot express an affinity mask that includes more than that many processors. Windows solves this using processor groups. Each process can have an assigned processor group, and the affinity mask applies to processors in that group. If you query the number of logical processors on the system, most APIs will instead tell you how many processors can be accessed by your process. In multi-socket systems the first processor group may not contain a full 32 or 64 logical processors – instead, the system is likely to distribute the logical processors among groups in such a way that offers more optimal scheduling on the system’s NUMA topology.

You could use WMI to query this information, but I’m generally not a fan of using WMI when other APIs are available. The GetLogicalProcessorInformationEx API has been around since Windows 7 and allows you to enumerate the complete topology of physical processor packages, NUMA nodes, processor groups, and cores. The simplest way to use this API to find the number of logical processors is to call it with RelationProcessorCore, iterate through the returned SYSTEM_LOGICAL_PROCESSOR_INFORMATION_EX structures, and add either 1 or 2 to an accumulator based on whether or not each core is marked as being SMT enabled.
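The accumulation step described above can be sketched without the Windows headers by reducing each returned core record to the two fields that matter. `core_record` is a hypothetical, flattened stand-in for the processor relationship data. The value of LTP_PC_SMT (0x1) is taken from the Windows SDK. The second function is an alternative I am adding for comparison: it counts the bits set in each core's mask instead of relying on the SMT flag, which also copes with cores that expose more than two logical processors.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define LTP_PC_SMT 0x1   /* flag value from the Windows SDK headers */

/* Hypothetical, flattened view of one RelationProcessorCore record. */
typedef struct {
    uint8_t  flags;       /* LTP_PC_SMT when the core has SMT enabled */
    uint64_t group_mask;  /* one bit per logical processor on this core */
} core_record;

/* The approach from the text: add 2 for an SMT core, otherwise 1. */
size_t count_logical_by_flag(const core_record *cores, size_t n)
{
    assert(cores != NULL);
    size_t total = 0;
    for (size_t i = 0; i < n; i++)
        total += (cores[i].flags & LTP_PC_SMT) ? 2 : 1;
    return total;
}

/* Alternative: count the bits set in each core's affinity mask. */
size_t count_logical_by_mask(const core_record *cores, size_t n)
{
    assert(cores != NULL);
    size_t total = 0;
    for (size_t i = 0; i < n; i++)
        for (uint64_t m = cores[i].group_mask; m != 0; m &= m - 1)
            total++;      /* each iteration clears the lowest set bit */
    return total;
}
```

Both functions agree on ordinary two-way SMT hardware, so either can supply the CPU count used by the thread-count heuristic.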

We can put all of this together to detect Hyper-V’s VMBUS driver:

Running this on the host correctly produces negative results:

This technique can be adapted to create detection heuristics for any driver that spawns multiple threads, using a combination of offsets and counts. This is useful for detecting other VM drivers (e.g. those included with VirtualBox or VMware’s guest tools) or drivers associated with EDR or AV products.

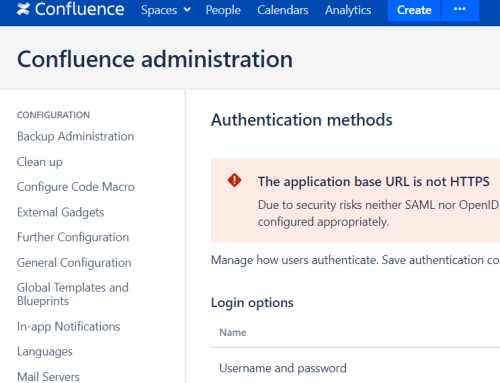

One restriction of this trick is that it doesn’t work when executing as a low integrity process:

![A screenshot of a command line window. The proof of concept program is being executed as a low-integrity process. The output is as follows: [DEBUG] Querying module information... NtQuerySystemInformation call failed with status 0xC0000022. [DEBUG] Failed to get module information. Continuing anyway. Querying process information... 10 total threads found. 0 system threads found. No system threads found. This usually occurs for low integrity processes.](https://i0.wp.com/labs.nettitude.com/wp-content/uploads/2021/03/word-image-9.png?resize=646%2C269&ssl=1)

This fails because NtQuerySystemInformation only returns information for threads in the current process when the caller has low integrity. It doesn’t allow you to get module information, either.

Just for fun, I built a version of this executable with no console output and renamed some of the functions to more innocuous names, then ran it through a number of online threat analysis tools. None of them detected anything more suspicious than the process enumeration.

Proof of concept source code can be found here.

Stay tuned for next month’s installment of 2021: A year of VM detection tricks.